Computational Fluid Dynamics

Neko

Neko is a portable framework for high-order spectral element flow simulations. Written in modern Fortran, Neko adopts an object-oriented approach, allowing multi-tier abstractions of the solver stack and facilitating various hardware backends ranging from general-purpose processors, CUDA and HIP enabled accelerators to SX-Aurora vector processors. Neko has its roots in the spectral element code Nek5000 from Chicago/ANL, from where many of the namings, code structure, and numerical methods are adopted.

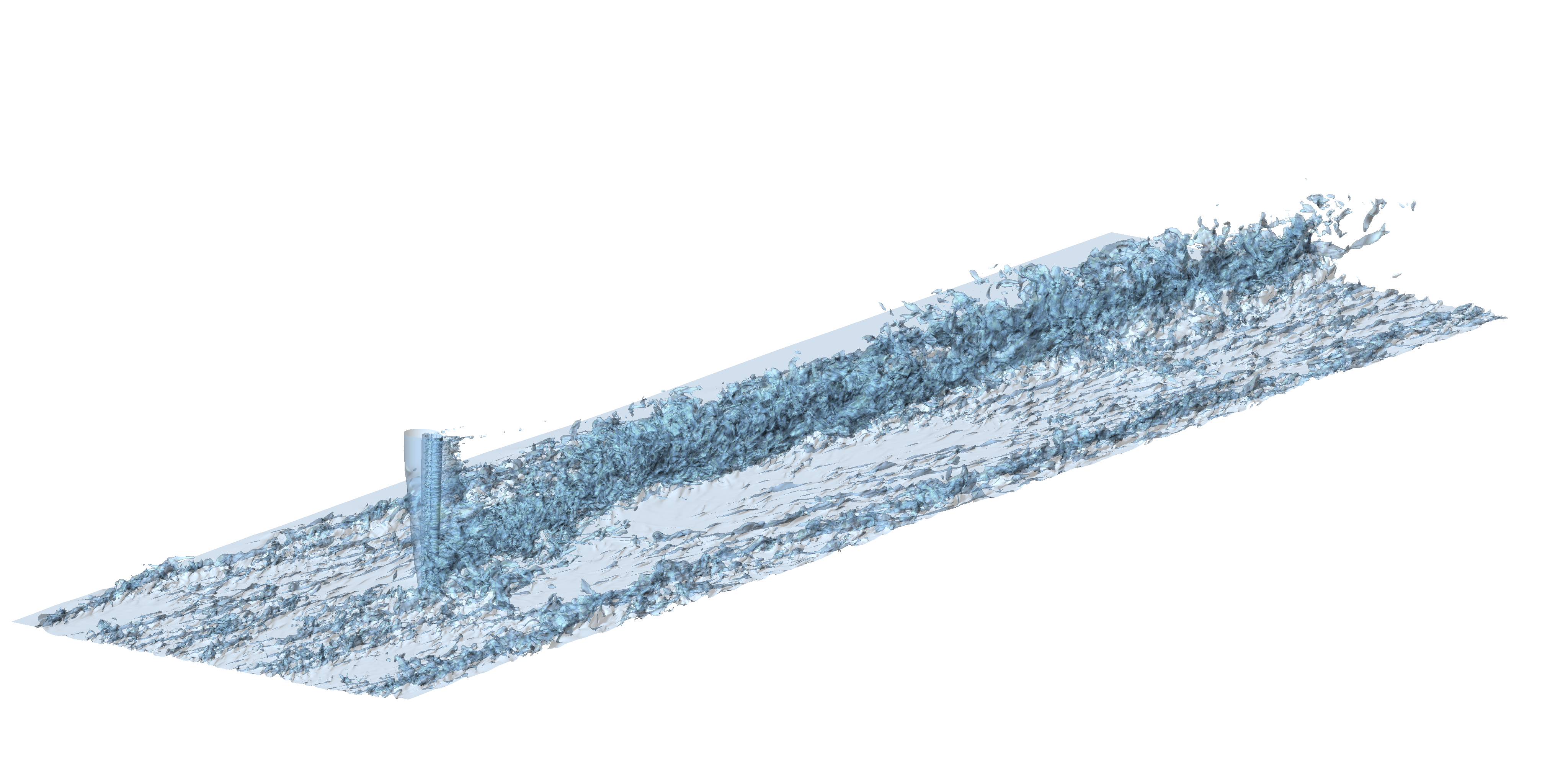

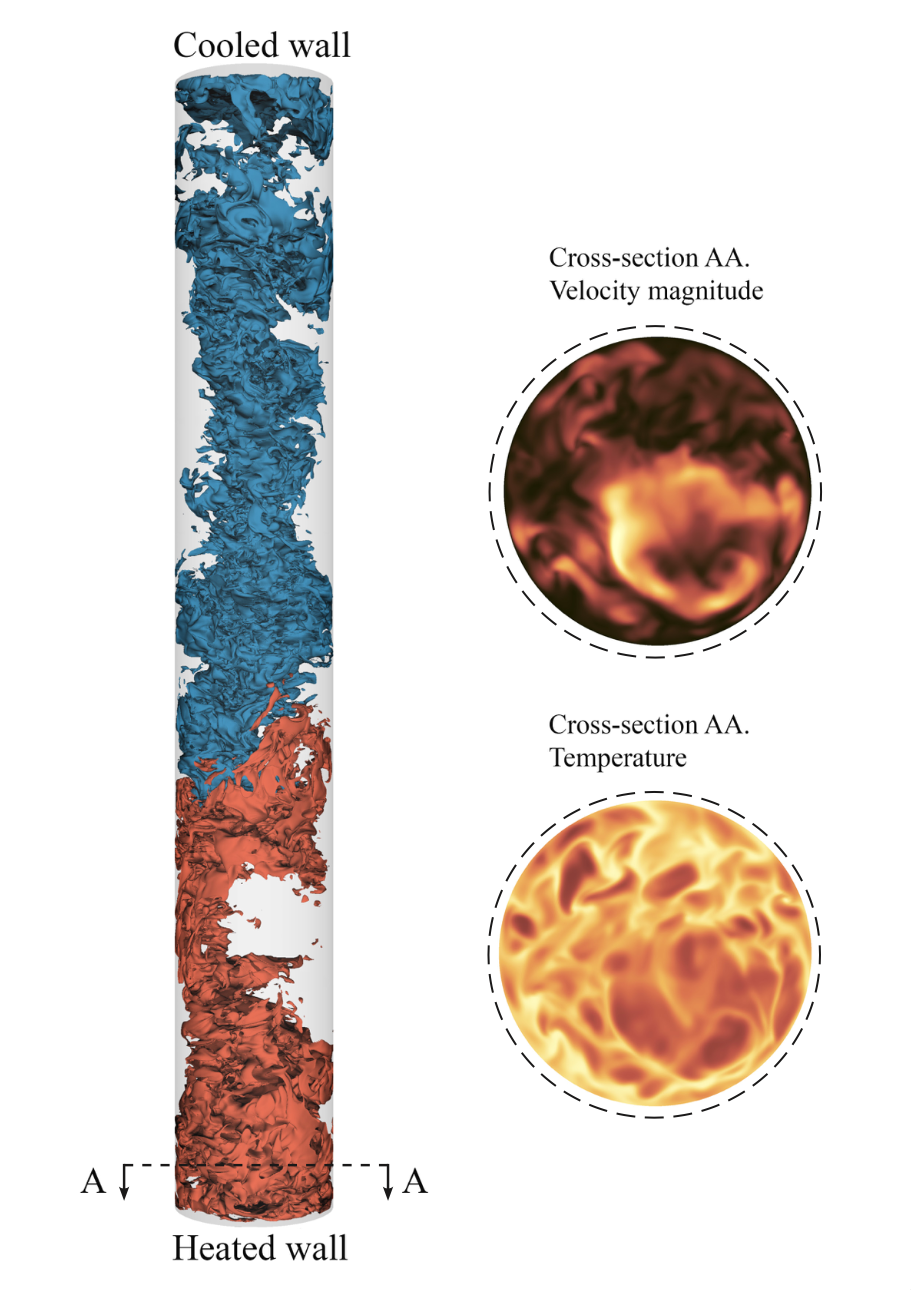

A milestone in the development of Neko came in 2023 when the Neko team was nominated to be Gordon Bell Prize finalist: Neko was used for large-scale simulations to explore the ultimate regime of turbulent Rayleigh–Bénard convection. This work was possible thanks to the technical work done in the DEEP-SEA project.

Computational Fluid Dynamics in the first DEEP project

AVBP is a parallel Computational Fluid Dynamic (CFD) code that studies the combustion process in gas turbines, targeting its optimisation, impacting stability and pollution reduction. The AVBP code has been ported to all major systems (SGI Altix ICE, CRAY XT4, IBM BlueGene/Q) with excellent performance. However, keeping a good level of scalability and performance on the upcoming HPC systems is challenging: CFD requires all computing cores to communicate frequently, and the physical models often require reduced variables (max/min/mean) over the complete computing partition.

In the DEEP project, CERFACS (the European Centre for Research and Advanced Training in Scientific Computation) aimed at improving AVBP’s scalability by taking advantage of the DEEP Cluster-Booster architecture. In order to do that, the bottlenecks caused by the original master/slave approach were removed as a first step. The next step was migrating from a pure MPI approach to a hybrid approach of MPI+OmpSs. The OmpSs model allows exposing additional parallelism, and by using the task-based model, it was possible to implement a version of the application that outperformed and outscaled the previous one. Loop refactoring and compiler hints gave an extra edge in performance, as now the vector units are used more efficiently. Lastly, the I/O operations have been offloaded, together with costly global reductions that hindered the scalability of the application, and that were then performed in an overlapped manner in the Cluster, while the simulation continued on the Booster.