DEEP System

The DEEP system is a prototype modular supercomputer that was designed and installed within the EU-funded DEEP-EST project. It serves as a testbed for new system components and as a platform for software development by the SEA projects.

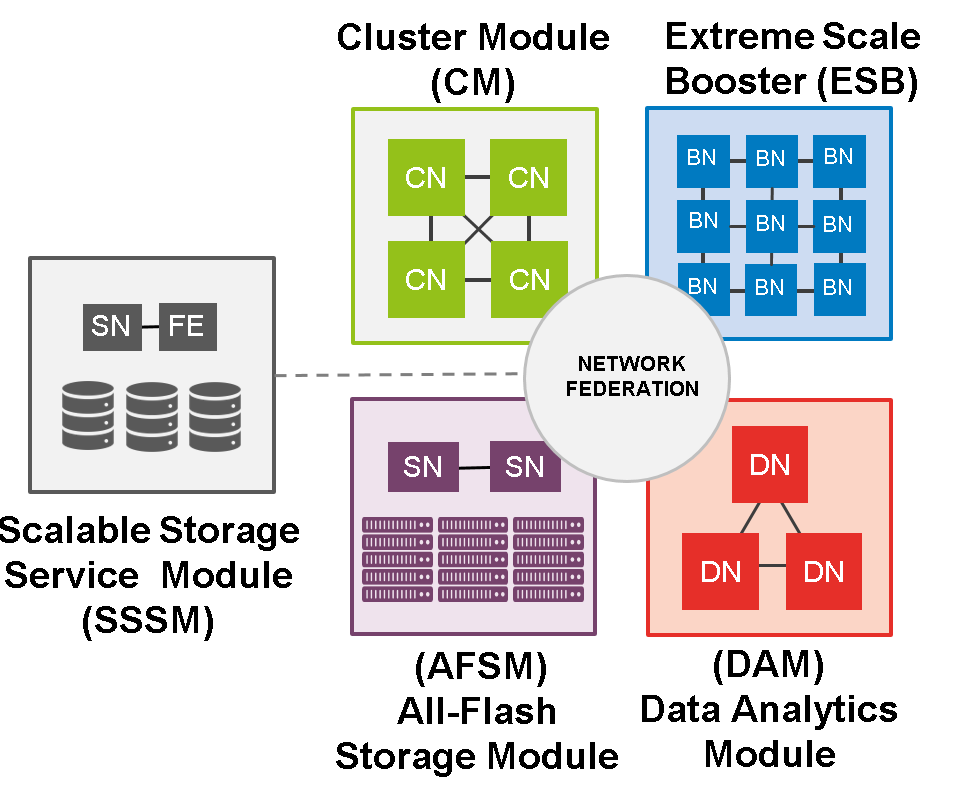

As shown in Figure 1, the DEEP system includes three compute modules and two high-performance storage modules:

- Cluster Module (CM) – 50 dual-processor nodes using Intel® Xeon® 6146 processors optimized for high single-thread performance

- Extreme Scale Booster (ESB) – 75 single processor/GPGPU nodes using one Intel® Xeon® 4215 processor for I/O and coordination tasks and one NVIDIA Tesla V100 GPGPU for compute

- Data Analytics Module (DAM) – 16 dual-processor nodes using Intel® Xeon® 8260M processors and one or two NVIDIA Tesla V100 GPGPUs each. Four of the nodes include Intel® Stratix® 10 FPGA accelerators; all nodes are equipped with 3 TB of Intel® Optane™ persistent memory

- Scalable Storage Service Module (SSSM) with a total of 292 TB of hard-disk storage running the BeeGFS parallel file system

- All-Flash Storage Module (AFSM) with a total of 2016 TB of NVMe storage running the BeeGFS parallel file system
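The module list above can be captured as a small inventory from which system-wide totals follow. This is a minimal sketch: the `ModuleEntry` class and the `compute_nodes` and `storage_tb` helpers are hypothetical names for illustration, and the figures are only those quoted in the list (the four BXI-2 nodes, which belong to none of the five modules, are not counted).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleEntry:
    """One DEEP module as listed above (hypothetical helper type)."""
    abbrev: str
    kind: str              # "compute" or "storage"
    nodes: int = 0         # compute modules only
    capacity_tb: int = 0   # storage modules only
    medium: str = ""       # storage medium, storage modules only


DEEP_MODULES = [
    ModuleEntry("CM", "compute", nodes=50),
    ModuleEntry("ESB", "compute", nodes=75),
    ModuleEntry("DAM", "compute", nodes=16),
    ModuleEntry("SSSM", "storage", capacity_tb=292, medium="HDD"),   # BeeGFS
    ModuleEntry("AFSM", "storage", capacity_tb=2016, medium="NVMe"), # BeeGFS
]


def compute_nodes(modules):
    """Total node count over all compute modules."""
    return sum(m.nodes for m in modules if m.kind == "compute")


def storage_tb(modules, medium=None):
    """Total storage capacity in TB, optionally restricted to one medium."""
    return sum(
        m.capacity_tb
        for m in modules
        if m.kind == "storage" and (medium is None or m.medium == medium)
    )


if __name__ == "__main__":
    total = storage_tb(DEEP_MODULES)
    flash = storage_tb(DEEP_MODULES, "NVMe")
    print(compute_nodes(DEEP_MODULES))          # 141
    print(total, f"{flash / total:.0%} flash")  # 2308 87% flash
```

Summed this way, the three compute modules provide 141 nodes, and roughly 87% of the 2308 TB of BeeGFS capacity sits on the all-flash module.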

Following the concept of the Modular Supercomputing Architecture (MSA), workloads (or parts of workloads) with high demands on single-thread performance and limited scalability are placed on the CM, while highly scalable codes run on the “lean” nodes of the ESB, whose GPGPUs provide highly parallel and efficient compute capability. The ESB CPUs were carefully selected to handle only I/O and coordination tasks with optimal energy efficiency. The DAM is used by data analytics and machine learning (ML) applications with high demands on memory capacity and/or a need for an FPGA accelerator. Details about the compute modules can be found in the table at the end of this document.
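The placement rules of thumb above can be expressed as a small decision function. This is an illustrative sketch only: `WorkloadPart`, its boolean flags, and `place` are hypothetical names, and the DEEP system does not select modules this way automatically; users choose modules when they request resources.

```python
from dataclasses import dataclass
from enum import Enum


class Module(Enum):
    CM = "Cluster Module"
    ESB = "Extreme Scale Booster"
    DAM = "Data Analytics Module"


@dataclass(frozen=True)
class WorkloadPart:
    """Hypothetical, coarse profile of one part of an application."""
    name: str
    highly_scalable: bool        # scales to many nodes, maps well to GPGPUs
    analytics_or_ml: bool = False
    large_memory: bool = False   # needs more memory than a CM or ESB node offers
    needs_fpga: bool = False


def place(part: WorkloadPart) -> Module:
    """Pick a module using the MSA rules of thumb described in the text."""
    # FPGAs exist only on the DAM; memory-hungry analytics/ML also goes there.
    if part.needs_fpga or (part.analytics_or_ml and part.large_memory):
        return Module.DAM
    # Highly scalable parts run on the GPGPUs of the lean ESB nodes.
    if part.highly_scalable:
        return Module.ESB
    # Limited scalability and high single-thread demands: the CM.
    return Module.CM


if __name__ == "__main__":
    app = [
        WorkloadPart("setup", highly_scalable=False),
        WorkloadPart("solver", highly_scalable=True),
        WorkloadPart("training", highly_scalable=False,
                     analytics_or_ml=True, large_memory=True),
    ]
    for part in app:
        print(part.name, "->", place(part).name)
    # setup -> CM
    # solver -> ESB
    # training -> DAM
```

The order of the checks matters: the DAM test comes first because an FPGA requirement rules out the other modules regardless of how well the code scales.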

Four compute nodes connected by the BXI-2 fabric from Atos were recently added to the system. These nodes use four CPUs.

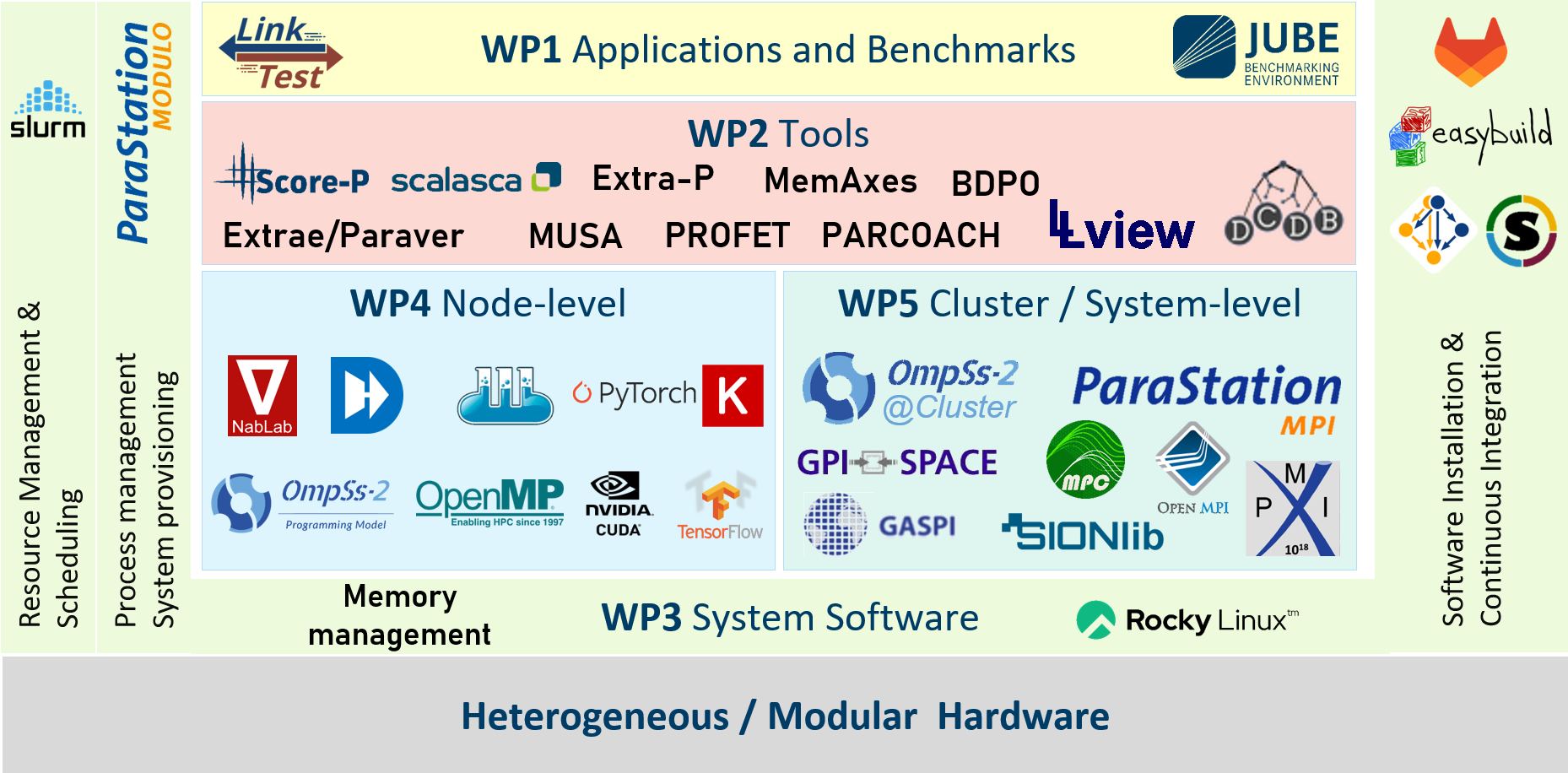

The DEEP system currently runs the Rocky Linux 8.6 operating system and uses Slurm as its batch scheduler. ParTec’s ParaStation Modulo software suite handles resource provisioning and management, and ParaStation MPI transparently handles high-performance communication within and between modules. EasyBuild is used to manage the installation of software packages and tools, including those developed in the DEEP-SEA project. The full software stack developed by the DEEP-SEA project is shown in Figure 2.
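Because Slurm schedules the modules, a single job can span several of them through Slurm's heterogeneous job support. The sketch below renders such a batch script; the partition names `dp-cn` and `dp-esb` and the executables `./cm_part` and `./esb_part` are assumptions for illustration, so the actual partition names should be taken from the system's user documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One component (module share) of a Slurm heterogeneous job."""
    partition: str   # assumed partition name, e.g. "dp-cn"; check local docs
    nodes: int
    executable: str  # hypothetical program for this component


def hetjob_script(components: list[Component]) -> str:
    """Render a batch script whose components land on different modules."""
    if not components:
        raise ValueError("at least one component is required")
    lines = ["#!/bin/bash"]
    for i, comp in enumerate(components):
        if i > 0:
            # Slurm separates heterogeneous job components with this line.
            lines.append("#SBATCH hetjob")
        lines.append(f"#SBATCH --partition={comp.partition}")
        lines.append(f"#SBATCH --nodes={comp.nodes}")
    # A single srun with ':'-separated parts starts one heterogeneous step;
    # the k-th part runs on the k-th job component.
    lines.append("srun " + " : ".join(comp.executable for comp in components))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(hetjob_script([
        Component("dp-cn", 1, "./cm_part"),
        Component("dp-esb", 4, "./esb_part"),
    ]), end="")
```

The rendered script would be submitted with `sbatch`. Launching both parts from one `srun` call, rather than two separate job steps, is what lets an MPI library that supports it, such as ParaStation MPI, treat the processes on both modules as one MPI job.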

Compute Module Details

| | Cluster Module | Booster Module | Data Analytics Module |
|---|---|---|---|
| Usage/design target | Applications and code parts with limited scalability requiring high single-thread performance and a modest amount of memory | Compute-intensive applications and code parts with regular control and data structures and high scalability | Data-intensive analytics and ML applications and code parts requiring large memory capacity, data streaming, or bit- or small-datatype processing |
| Number of nodes | 50 | 75 | 16 |
| CPUs per node | 2 × Intel® Xeon® 6146 | 1 × Intel® Xeon® 4215 | 2 × Intel® Xeon® 8260M |
| CPU generation | “Skylake” | “Cascade Lake” | “Cascade Lake” |
| CPU cores & frequency | 2 × 12 @ 3.2 GHz | 8 @ 2.5 GHz | 2 × 24 @ 2.4 GHz |
| Accelerators per node | None | 1 × NVIDIA Tesla V100 | 1 × NVIDIA Tesla V100, 1 × Intel® Stratix® 10 |
| Memory capacity | 192 GB DDR4 | 48 GB DDR4 | 384 GB DDR4 |
| Accelerator memory | None | 32 GB HBM | 32 GB HBM (GPGPU), 32 GB DDR4 (FPGA) |
| Persistent memory | None | None | 3 TB Intel® Optane™ |
| Memory BW per node | 256 GB/s | 900 GB/s (GPGPU) | 900 GB/s (GPGPU) |
| Local storage | 1 × 512 GB NVMe SSD | 1 × 512 GB NVMe SSD | 2 × 1.5 TB NVMe SSD |
| Interconnect fabric | EDR-IB (100 Gb/s) | EDR-IB (100 Gb/s) | EDR-IB (100 Gb/s) |
| Interconnect topology | Fat tree | Tree | Tree |
| Power envelope per node | 500 W | 500 W | 1600 W |
| Cooling | Warm-water | Warm-water | Air |
| Integration | 1 × rack MEGWARE SlideSX®-LC ColdCon | 3 × rack MEGWARE SlideSX®-LC ColdCon | 1 × rack MEGWARE |
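A few derived figures make the design targets in the table easier to compare, for example host memory per core and the summed power envelope of each module. This is a sketch using only the table's per-node values; `NodeSpec` and `derived` are hypothetical names, memory counts DDR4 only (no HBM, FPGA memory, or Optane), TB is taken as 1000 GB, and the power figures add up per-node envelopes, which are upper bounds rather than measured consumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node figures copied from the compute module table."""
    module: str
    nodes: int
    sockets: int
    cores_per_socket: int
    dram_gb: int   # DDR4 only; excludes accelerator memory and Optane
    power_w: int   # power envelope per node (an upper bound)


SPECS = [
    NodeSpec("CM", nodes=50, sockets=2, cores_per_socket=12, dram_gb=192, power_w=500),
    NodeSpec("ESB", nodes=75, sockets=1, cores_per_socket=8, dram_gb=48, power_w=500),
    NodeSpec("DAM", nodes=16, sockets=2, cores_per_socket=24, dram_gb=384, power_w=1600),
]


def derived(spec: NodeSpec) -> dict[str, float]:
    """Per-node and per-module figures computed from one table column."""
    cores = spec.sockets * spec.cores_per_socket
    return {
        "cores_per_node": cores,
        "dram_gb_per_core": spec.dram_gb / cores,
        "module_cores": spec.nodes * cores,
        "module_dram_tb": spec.nodes * spec.dram_gb / 1000,
        "module_power_kw": spec.nodes * spec.power_w / 1000,
    }


if __name__ == "__main__":
    for spec in SPECS:
        d = derived(spec)
        print(f"{spec.module}: {d['dram_gb_per_core']:.0f} GB/core, "
              f"{d['module_cores']} cores, {d['module_power_kw']:.1f} kW")
    # CM: 8 GB/core, 1200 cores, 25.0 kW
    # ESB: 6 GB/core, 600 cores, 37.5 kW
    # DAM: 8 GB/core, 768 cores, 25.6 kW
```

The ESB has the least host memory per core, which fits its design: its CPUs only coordinate and feed the GPGPU, whose 32 GB of HBM holds the working data. Summed over the three modules, the per-node envelopes come to 88.1 kW.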