Seismic Imaging

Seismic imaging aims to provide accurate and detailed 3D maps of the Earth's subsurface from surface recordings of acoustic wave propagation.

Full waveform inversion (FWI) is a geophysical inverse problem that uses the adjoint method to iteratively improve a physical model, typically parameterised in terms of wave velocity and density. Several reverse time migrations (RTM) are therefore performed during the optimisation process. Two implementations of RTM will be used in DEEP-SEA: the Barcelona Subsurface Imaging Tools (BSIT), based on MPI and OpenMP (or CUDA), and the Fraunhofer Reverse Time Migration (FRTM), based on GASPI and GPI-Space.
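The iterative structure described above can be illustrated with a deliberately simplified sketch. It assumes a linear forward operator (a random matrix `A`) in place of a wave-equation solver, so the adjoint is simply the transpose. The names `invert`, `forward`, `adjoint` and the toy data are hypothetical and are not taken from BSIT or FRTM; a real FWI would replace the two operators with forward and reverse-time wave propagation.

```python
import numpy as np

def invert(forward, adjoint, observed, model0, step, n_iter):
    """Gradient descent on the misfit 0.5 * ||forward(model) - observed||^2."""
    model = model0.copy()
    for _ in range(n_iter):
        residual = forward(model) - observed   # data misfit for the current model
        gradient = adjoint(residual)           # adjoint maps the misfit back to model space
        model = model - step * gradient        # iterative model improvement
    return model

# Toy stand-in for the physics (assumption): a linear operator A.
rng = np.random.default_rng(0)
A = rng.normal(size=(40, 5))
true_model = np.array([1.5, 2.0, 2.5, 3.0, 3.5])
observed = A @ true_model

# A step of 1 / ||A||_2^2 keeps gradient descent stable for this quadratic misfit.
step = 1.0 / np.linalg.norm(A, 2) ** 2
recovered = invert(lambda m: A @ m, lambda r: A.T @ r,
                   observed, np.zeros(5), step, 2000)
```

In this toy setting each iteration costs one forward and one adjoint application, which mirrors why an FWI run triggers repeated RTM-like computations.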

Within the project framework, a BSIT-like version will be used, namely a mock-up benchmark. The application uses different parallelisation strategies at different levels: MPI and OpenMP (or CUDA) at kernel level and a master-worker scheme for scheduling the complex inversion workflow. The code has been optimised for several specific hardware architectures but is limited by data access at three levels: the kernel (memory-bound, low operational intensity), non-persistent storage, and global file access. Within DEEP-SEA, improvements in these areas will be achieved by using the strategies proposed in the work package “Software Architecture and System Software” (WP3). More specifically, this application will benefit from the analysis of different memory strategies on novel architectures (e.g., Post-K Fujitsu, Cascade Lake with Optane, servers with heterogeneous memories).

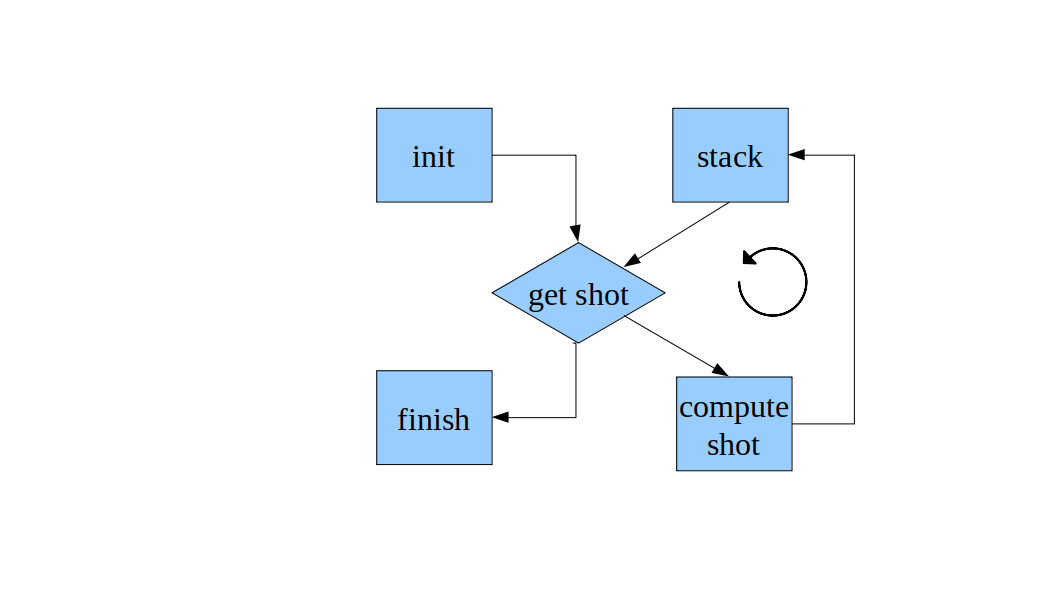

The Fraunhofer Reverse Time Migration (FRTM) implements the method of Reverse Time Migration (RTM), which images seismic data and is based on the finite-difference time-domain discretization of the full wave equation. It is employed for oil and gas exploration and allows for accurate imaging of complex subsurface structures. FRTM implements the RTM method in a robust and massively parallel way by using proprietary HPC tools like the PGAS communication API GPI-2 and the task-based scheduler ACE, both developed at Fraunhofer ITWM. As such, it combines the necessary degree of accuracy with high efficiency and scales over almost three orders of magnitude.
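To make the finite-difference time-domain idea concrete, the following is a minimal sketch of one explicit time-stepping scheme for the 1D constant-density acoustic wave equation, second order in time and space. It is not FRTM code: the function names, grid sizes and the point-like initial disturbance are assumptions chosen for illustration, and production RTM kernels work in 3D with higher-order stencils and absorbing boundaries.

```python
import numpy as np

def step_wavefield(p_prev, p_curr, velocity, dt, dx):
    """Advance the pressure field by one time step using a 3-point stencil."""
    lap = np.zeros_like(p_curr)
    # Second-order central difference for the spatial second derivative.
    lap[1:-1] = (p_curr[2:] - 2.0 * p_curr[1:-1] + p_curr[:-2]) / dx**2
    # Leapfrog update of the second time derivative.
    p_next = 2.0 * p_curr - p_prev + (velocity * dt) ** 2 * lap
    # Fixed (zero-pressure) boundaries, chosen here only for simplicity.
    p_next[0] = 0.0
    p_next[-1] = 0.0
    return p_next

def propagate(velocity, dt, dx, n_steps, source_index):
    """Propagate a point-like initial disturbance for n_steps time steps."""
    p_prev = np.zeros(velocity.shape[0])
    p_curr = np.zeros(velocity.shape[0])
    p_curr[source_index] = 1.0
    for _ in range(n_steps):
        p_next = step_wavefield(p_prev, p_curr, velocity, dt, dx)
        p_prev, p_curr = p_curr, p_next
    return p_curr

# Hypothetical setup: 201 cells of 10 m, uniform 2000 m/s, 2 ms time step.
# The Courant number velocity * dt / dx = 0.4 is below the stability limit of 1.
velocity = np.full(201, 2000.0)
field = propagate(velocity, dt=0.002, dx=10.0, n_steps=50, source_index=100)
```

Each update reads three neighbouring values per grid point and performs only a handful of floating-point operations, which is why stencil kernels of this kind tend to be limited by memory bandwidth rather than by arithmetic throughput.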

FHG’s FRTM will benefit from the extensions to GASPI and GPI-Space developed in the work package “System-level programming” (WP5). Specifically, the RTM will use the resilience measures of GPI and exploit the malleability features implemented by the Slurm scheduler and presented to the application developer by GPI and GPI-Space. Within DEEP-SEA, the performance improvements expected from alternative memory architectures, as well as from the data allocation and migration strategies proposed by the WP3 tools, will be analysed. Currently, a multithreaded CPU version of the code and an FPGA kernel for the stencil computation exist. To take advantage of more heterogeneity, a GPU version of FRTM will be implemented to run on the Booster module of the DEEP-EST prototype. Support for heterogeneous systems (especially the GPU implementation) will increase the competitiveness of the commercial FRTM application.

Seismic Imaging in the first DEEP project

In our first project, DEEP, the applications portfolio and geophysical research activities of the French company CGG provided samples of seismic imaging codes representative of current and future trends in the oil and gas industry. From a programming perspective, those codes were both very compute-intensive and I/O-intensive, but in most cases featured enough intrinsic parallelism to make them good candidates for large-scale parallelisation.

The DEEP Architecture made it possible to study the scalability of CGG’s key applications by re-engineering the codes to take advantage of the vector capabilities, the large number of cores, and the high memory bandwidth of the Booster Nodes, while the Cluster was used to perform large I/O operations and to accumulate the results of the computations run on the Booster. This way, the ratio of Xeon Phi coprocessors to Xeon nodes was highly dynamic, allowing CGG to minimise the amount of idle resources.