EcoHMEM

Hybrid memory systems are an emerging approach to providing larger main-memory capacity at reasonable cost and energy consumption. Byte-addressable persistent memory (PMem) offers lower latency than NAND-based storage (e.g. SSDs) and higher capacity than DRAM, which opens up many opportunities for optimizing application performance. Within the DEEP projects, we developed the EcoHMEM framework, which exploits the different capabilities of the underlying memory infrastructure without requiring any modifications to the application code.

Methodology

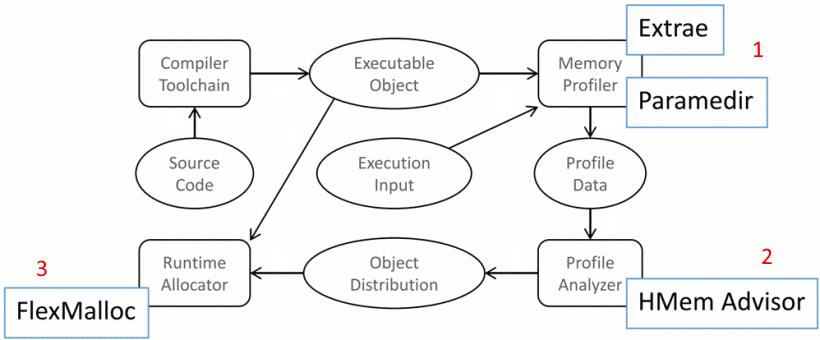

The EcoHMEM framework optimizes applications by minimizing the CPU cycles lost to L3 cache misses. To this end, it follows three main steps:

- Profiling Run: The Extrae and Paramedir tools collect profiling data at object granularity.

- Data Placement: The HMEM Advisor component uses the profiling data to decide an initial data distribution.

- Production Run: The application is launched with the FlexMalloc allocator preloaded into the execution environment. This allocator controls the allocation of dynamic objects, honoring the distribution recommended in the Data Placement step.
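
To make the Data Placement step more concrete, the following is a minimal sketch of an access-density-based classification, the kind of initial distribution that the Bandwidth-aware Object Placement section takes as input. It is an illustration under assumptions, not the HMEM Advisor's actual code: the `ProfiledObject` fields, the `place_by_density` function, the greedy fill order, and the tier labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProfiledObject:
    # All field names are hypothetical; they stand in for per-object
    # data produced by the profiling run.
    callstack_id: str   # identifier of the allocation site
    size_bytes: int     # size of the object
    llc_misses: int     # L3 cache misses attributed to the object


def place_by_density(objects, dram_capacity_bytes):
    """Greedy sketch: rank objects by misses per byte and fill DRAM first.

    Objects that do not fit in the remaining DRAM budget go to PMem.
    """
    ranked = sorted(
        objects,
        key=lambda o: o.llc_misses / max(o.size_bytes, 1),
        reverse=True,
    )
    placement = {}
    free = dram_capacity_bytes
    for obj in ranked:
        if obj.size_bytes <= free:
            placement[obj.callstack_id] = "DRAM"
            free -= obj.size_bytes
        else:
            placement[obj.callstack_id] = "PMEM"
    return placement
```

Ranking by misses per byte rather than by raw miss count favors small, frequently missed objects, which is one plausible way to get the most benefit out of a limited DRAM budget.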

Binary Object Matching (BOM)

The profiler in our framework translates each call-stack frame into a binary form consisting of the binary object that contains the frame address and the offset from the base address of that binary object. When BOM is enabled, during process initialization the library obtains the base address at which each shared library is loaded in memory and calculates the absolute address of each frame of every call-stack. When the process invokes a heap memory call, the call is intercepted by FlexMalloc, which captures the routine parameters and the call-stack.
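
The address arithmetic behind BOM can be sketched as follows. This is a simplified illustration, not FlexMalloc's implementation: the function names, the `(binary object, offset)` tuple layout, the example base addresses, and the fallback tier for unknown call-stacks are all assumptions made for the example.

```python
def resolve_callstack(frames, base_addresses):
    """Turn (binary_object, offset) frames into absolute addresses.

    base_addresses maps each binary object name to the address at which
    it is loaded in the current process.
    """
    return [base_addresses[name] + offset for name, offset in frames]


def build_lookup(advisor_entries, base_addresses):
    """Precompute a table from absolute call-stacks to memory tiers.

    advisor_entries is a list of (frames, tier) pairs, where frames is a
    list of (binary_object, offset) tuples.
    """
    return {
        tuple(resolve_callstack(frames, base_addresses)): tier
        for frames, tier in advisor_entries
    }


def tier_for_allocation(captured_callstack, lookup, fallback="PMEM"):
    """Match the call-stack captured at an intercepted heap call.

    The fallback tier for unmatched call-stacks is a hypothetical choice.
    """
    return lookup.get(tuple(captured_callstack), fallback)


# Hypothetical load addresses, as they might be obtained at process start.
bases = {"app": 0x400000, "libfoo.so": 0x7F0000000000}
entries = [([("app", 0x1234), ("libfoo.so", 0x10)], "DRAM")]
table = build_lookup(entries, bases)
```

Because the table is built once at initialization, matching an intercepted call reduces to comparing raw frame addresses, with no per-call translation of addresses back to binary objects and offsets.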

Bandwidth-aware Object Placement

Bandwidth-aware object placement is implemented as a post-processing step in the Advisor (the Data Placement step of the Methodology), after the traditional classification. The algorithm receives as input a set of objects already classified for placement in DRAM or PMem by our access-density-based algorithm and further divides them into groups using additional criteria. It consists of two steps:

- Categorization: The objective is to find objects currently in DRAM that experience low bandwidth demand. These may either be moved to PMem directly or act as replacement candidates for other objects currently assigned to PMem. This step also identifies objects in PMem that experience high bandwidth demand and would benefit from being placed in DRAM.

- Placement: This step assigns the objects categorized in the first step to DRAM or PMem.
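
The two steps can be sketched roughly as below. This is a hedged illustration rather than the Advisor's algorithm: the `ClassifiedObject` fields, the two bandwidth thresholds, and the swap policy (evict the coldest DRAM objects to make room for the hottest PMem objects) are assumptions chosen to keep the example small.

```python
from dataclasses import dataclass


@dataclass
class ClassifiedObject:
    # Hypothetical fields: output of the earlier classification plus a
    # per-object bandwidth estimate (units are arbitrary here).
    name: str
    size_bytes: int
    bandwidth: float
    tier: str  # "DRAM" or "PMEM"


def bandwidth_aware_placement(objects, dram_free_bytes, low_bw, high_bw):
    """Return a name -> tier map after bandwidth-aware post-processing."""
    tiers = {o.name: o.tier for o in objects}

    # Step 1, categorization: cold objects in DRAM, hot objects in PMem.
    cold_dram = sorted(
        (o for o in objects if o.tier == "DRAM" and o.bandwidth < low_bw),
        key=lambda o: o.bandwidth,
    )
    hot_pmem = sorted(
        (o for o in objects if o.tier == "PMEM" and o.bandwidth > high_bw),
        key=lambda o: o.bandwidth,
        reverse=True,
    )

    # Step 2, placement: promote hot objects, evicting cold ones if needed.
    for hot in hot_pmem:
        reclaimable = sum(o.size_bytes for o in cold_dram)
        if dram_free_bytes + reclaimable < hot.size_bytes:
            continue  # cannot make enough room; leave the object in PMem
        while dram_free_bytes < hot.size_bytes:
            cold = cold_dram.pop(0)  # coldest remaining DRAM object
            tiers[cold.name] = "PMEM"
            dram_free_bytes += cold.size_bytes
        tiers[hot.name] = "DRAM"
        dram_free_bytes -= hot.size_bytes
    return tiers
```

In this sketch a cold DRAM object is only demoted when doing so lets a hot PMem object fit; moving cold objects to PMem unconditionally, which the categorization step also allows, would be a different policy.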