JUBE

Automating benchmarks is important for reproducibility and comparability, which are the main goals of benchmarking. Furthermore, managing different parameter combinations by hand is error-prone and becomes a significant amount of work as the parameter space grows.

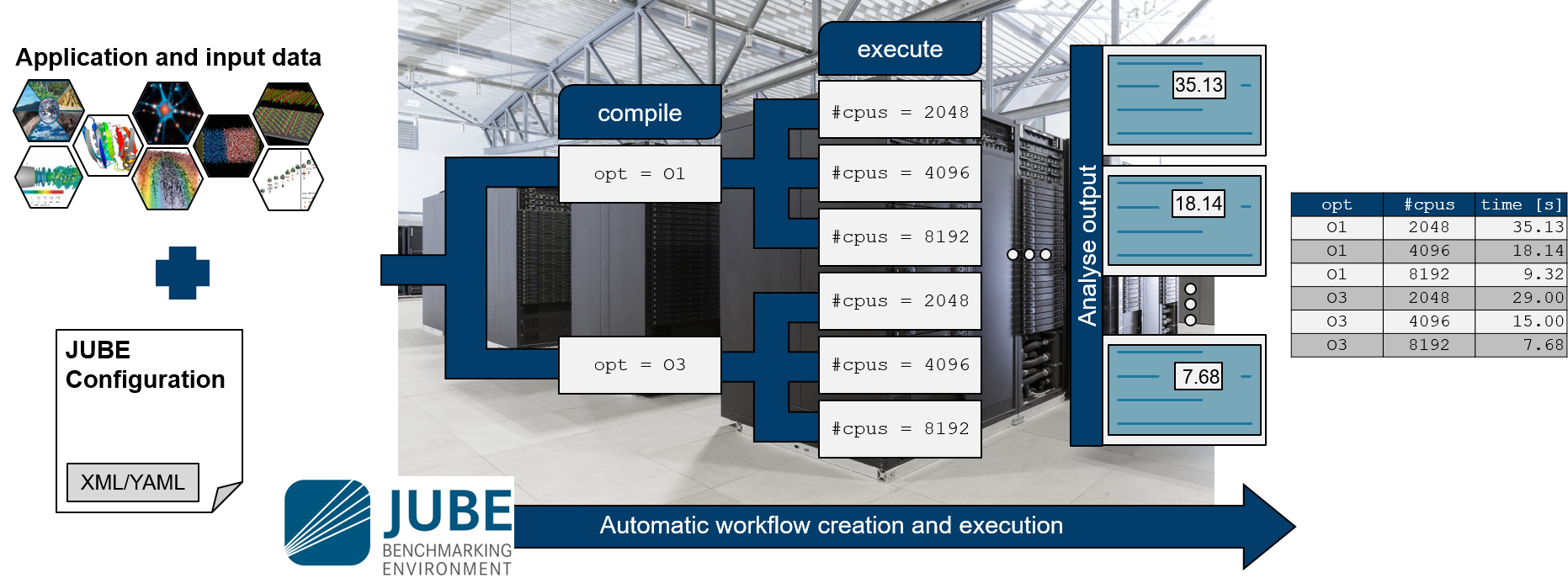

To alleviate these problems, the Jülich Benchmarking Environment (JUBE) helps to execute and analyse benchmarks in a systematic way. It is based on XML or YAML workflow descriptions that cover preparation of the environment, pre-processing, job submission, post-processing, and result extraction and analysis. Workflow steps that depend on parameters are evaluated automatically for each parameter combination and, if desired, executed in parallel. Figure 1 illustrates the principle of operation.

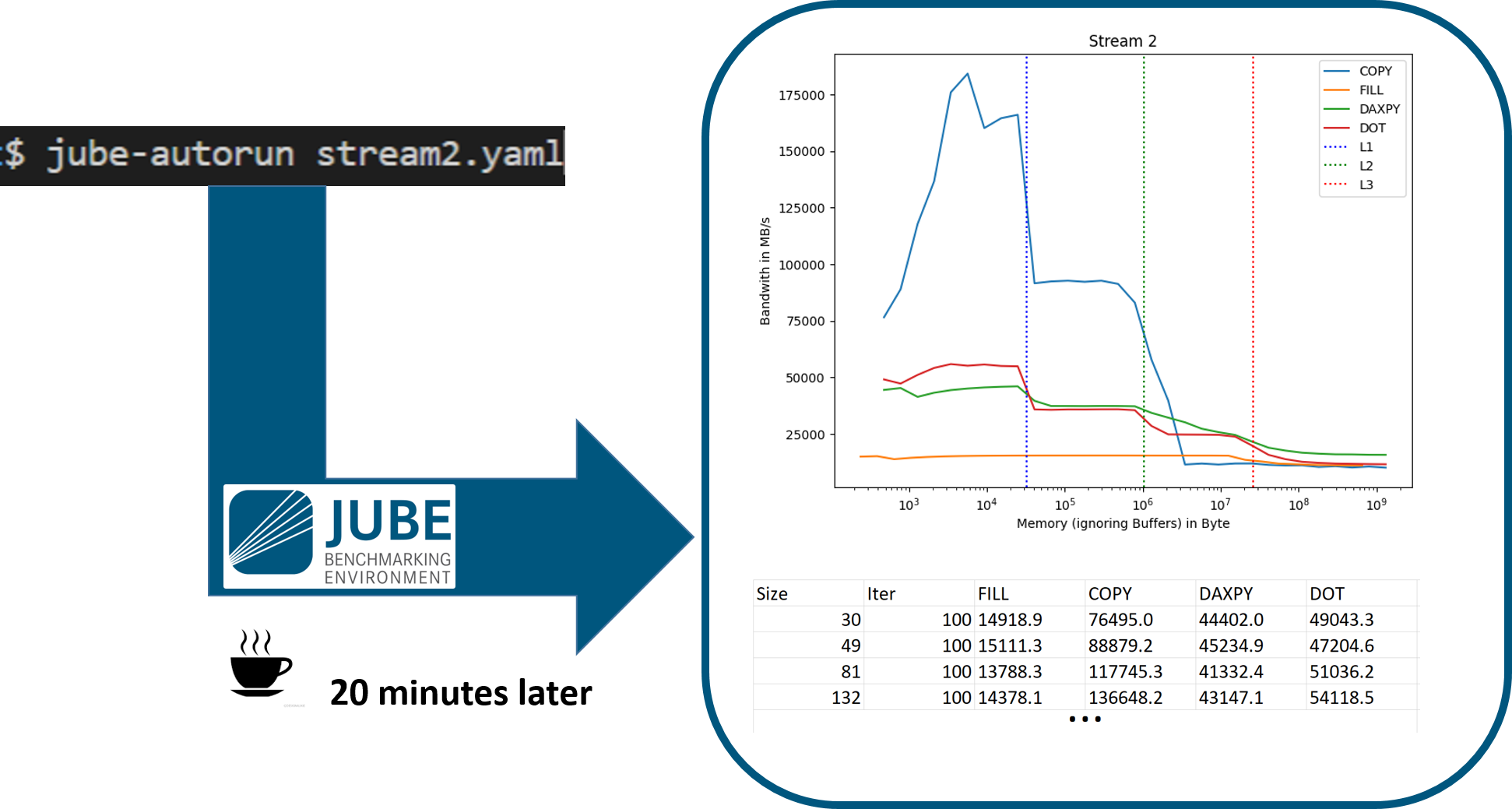
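
The per-combination evaluation of parameter-dependent steps can be pictured with a short Python sketch. This is only a conceptual illustration, not JUBE's implementation: the parameter names (`nodes`, `threads`), the command template, and the helper functions are hypothetical and chosen purely for the example.

```python
from itertools import product


def expand_parameter_space(parameters):
    """Return one dict per combination of the given parameter values."""
    names = list(parameters)
    value_lists = [parameters[name] for name in names]
    return [dict(zip(names, values)) for values in product(*value_lists)]


def render_command(template, combination):
    """Substitute one parameter combination into a command template."""
    return template.format(**combination)


# Hypothetical parameter space: 3 node counts x 2 thread counts.
parameters = {"nodes": [1, 2, 4], "threads": [8, 16]}

combinations = expand_parameter_space(parameters)

# Hypothetical command template; each combination yields one concrete command.
commands = [
    render_command("srun -N {nodes} ./app --threads {threads}", combination)
    for combination in combinations
]

for command in commands:
    print(command)
```

With three values for one parameter and two for the other, the sketch produces six concrete commands. Each added parameter multiplies that number, which is why handling the combinations by hand quickly becomes impractical.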

In DEEP-SEA, JUBE provides a uniform entry point for all benchmarks. No matter how complex the underlying setup, every benchmark can be run with a “jube run” command.

A frequent exchange of ideas between DEEP-SEA application developers, benchmarking experts, and the JUBE developer team has led to improvements being released in time for the project to profit from them. For example, recent updates have improved the parallel execution of workflow steps, and a “duplicate” keyword was introduced that allows for easier manipulation of parameter sets from the command line.