Software

DEEP-SEA: Adapting all levels of the software stack

The fourth project in the DEEP series focuses on the question of how future exascale systems can be used easily, or in a partly automated manner, while at the same time being as energy efficient as possible.

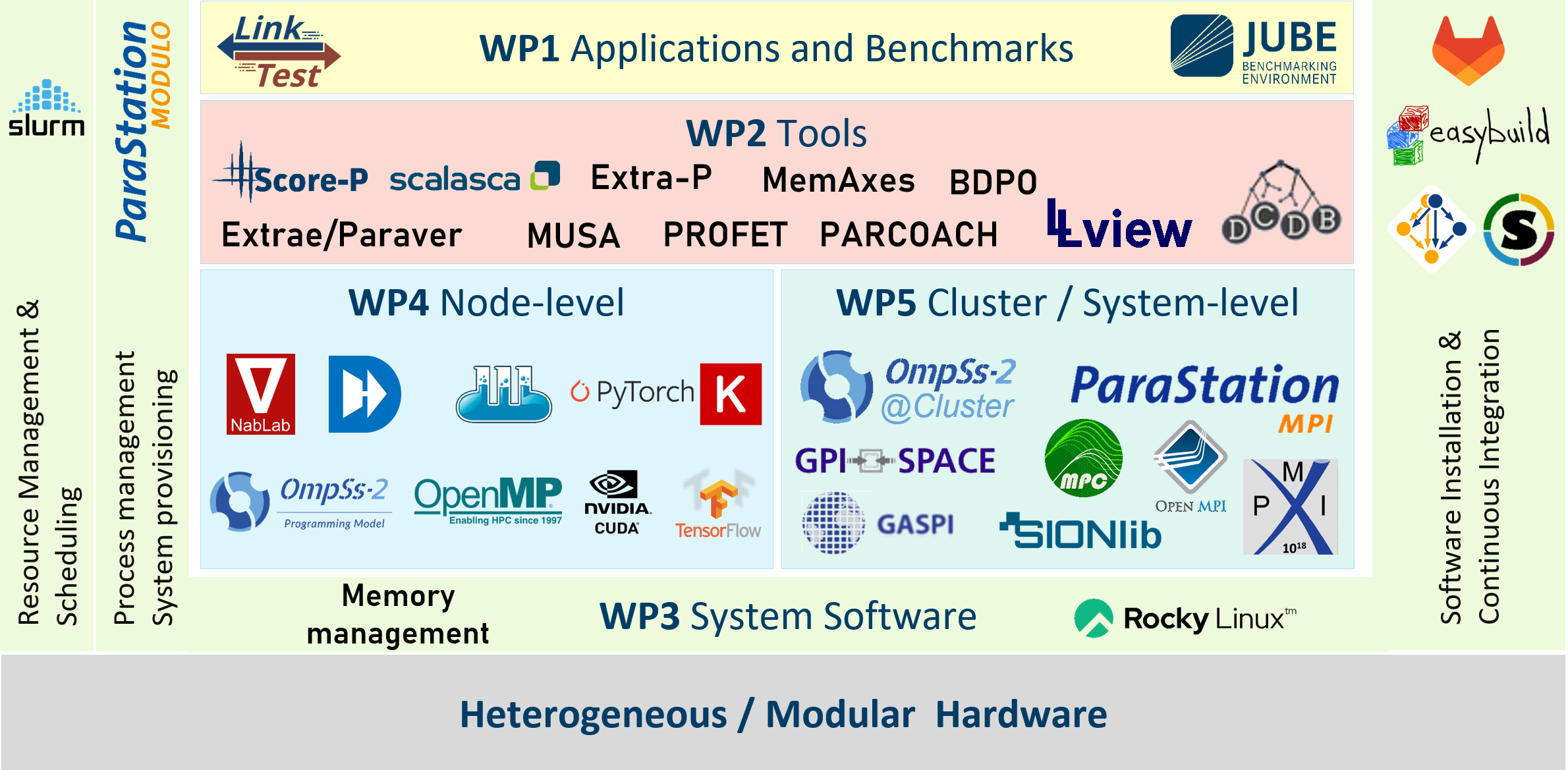

Systems and applications are rapidly becoming more complex. To make the best use of the available resources, these resources must be assigned dynamically according to the needs of each application. Furthermore, programming models and tools must enable efficient sharing and exploitation of heterogeneous computing capabilities. DEEP-SEA will therefore adapt all levels of the software stack.

DEEP-EST: Resource management & job scheduling

In the DEEP-EST project, system software development focused on resource management and job scheduling. The developments in this area made it possible to determine optimal resource allocations for each combination of workloads, supported adaptive scheduling and enabled dynamic reservation of resources. Combined with the modularity of the system itself, this maximised the usage of the overall system, since no component is "blocked" for an application that is not using it.
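To illustrate why dynamic, per-module reservation keeps components from being blocked, here is a minimal sketch. It is a toy model under stated assumptions, not the DEEP-EST resource manager: the `Module` and `ModularAllocator` classes, the module names `cluster` and `booster`, and the node counts are all hypothetical. Each phase of a job reserves nodes only on the module it runs on and releases them as soon as the phase ends, so another job can use them right away.

```python
from dataclasses import dataclass, field


@dataclass
class Module:
    """A hypothetical module of a modular system, tracked as a pool of nodes."""
    name: str
    total_nodes: int
    free_nodes: int = field(init=False)

    def __post_init__(self) -> None:
        # All nodes start out free.
        self.free_nodes = self.total_nodes


class ModularAllocator:
    """Toy allocator (hypothetical): a job phase reserves nodes on one
    module only while it runs and releases them when it finishes."""

    def __init__(self, modules: list[Module]) -> None:
        self.modules = {m.name: m for m in modules}

    def reserve(self, module: str, nodes: int) -> bool:
        m = self.modules[module]
        if nodes > m.free_nodes:
            return False  # not enough free nodes; the caller must wait
        m.free_nodes -= nodes
        return True

    def release(self, module: str, nodes: int) -> None:
        m = self.modules[module]
        # Never report more free nodes than the module actually has.
        m.free_nodes = min(m.total_nodes, m.free_nodes + nodes)

    def utilisation(self) -> dict[str, float]:
        # Fraction of nodes currently reserved on each module.
        return {n: 1 - m.free_nodes / m.total_nodes for n, m in self.modules.items()}


# Usage: job A runs a simulation phase on the booster, then an analysis
# phase on the cluster; job B waits for booster nodes.
alloc = ModularAllocator([Module("cluster", 4), Module("booster", 8)])
assert alloc.reserve("booster", 8)      # job A, phase 1 (simulation)
assert not alloc.reserve("booster", 4)  # job B must wait for now
alloc.release("booster", 8)             # phase 1 done: booster freed at once
assert alloc.reserve("cluster", 2)      # job A, phase 2 (analysis)
assert alloc.reserve("booster", 4)      # job B starts on the freed nodes
print(alloc.utilisation())
```

Because job A does not hold its booster nodes during its analysis phase, job B can start while A is still running, which is the effect the paragraph describes. A static allocation of the whole job would keep the booster idle for A's entire runtime.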

Furthermore, the DEEP-EST unified programming environment was based on standard components such as MPI and OmpSs, as well as on emerging programming paradigms from data analytics such as map-reduce. It provided a model that fully supported applications in using combinations of heterogeneous nodes and enabled developers to easily adapt and optimise their codes while keeping them fully portable.

There is growing scientific demand to use the NEST simulator also to model networks of neurons with more complex, e.g. highly non-linear, internal dynamics. This will require much greater computing power. Another challenge is the very large amount of data generated by large-scale network simulations. Today, this data is usually written to file and analysed offline afterwards, an approach that is often inefficient.

Significant progress in theory and simulation now allows us to make detailed, biophysically accurate predictions of local field potential (LFP) signals in brain models.

In the DEEP-EST project we used a workflow in which spikes were streamed directly from NEST point-neuron simulations into compartmental neuron simulations run with the Arbor simulation package. Arbor is currently being developed in a collaboration between SimLab Neuroscience at the Jülich Supercomputing Centre (JSC) and CSCS, the Swiss National Supercomputing Centre, as part of their activities in the Human Brain Project.

Programming Environment

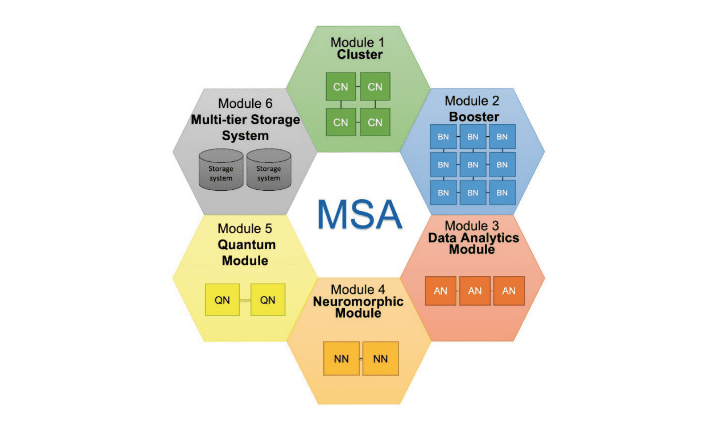

Driven by co-design, DEEP-SEA aims to shape the programming environment for the next generation of supercomputers, and specifically for the Modular Supercomputing Architecture (MSA). To achieve this goal, all levels of the programming environment are considered.

Benchmarking and Tools

Benchmarking is an essential element in evaluating the success of a hardware prototyping project. In the DEEP projects we use the JUBE benchmarking environment to assess the performance of the DEEP system.

Monitoring

Monitoring tools play an important role in the DEEP projects, and several different tools are used to monitor the systems.